Avid Amoeba

- 4 Posts

- 27 Comments

211·7 days ago

Yeah, that’s all there is to it, along with pure ignorance. In a past, not-so-ideologically-developed life, I wrote code under Apache 2 because it was “more free.” Understanding licenses, their implications, the ideologies behind them and their socioeconomic effects isn’t trivial. People certainly aren’t born educated in those, and often they reach for the code editor before that.

13·10 days ago

Elon Musk’s automaker has been backsliding in China for the past five consecutive months on a year-on-year basis, according to data from the country’s Passenger Car Association. Tesla’s shipments plunged 49% in February from a year earlier to just 30,688 vehicles, the lowest monthly figure since way back in July 2022, when it shipped just 28,217 EVs — and that was in the middle of Covid.

☺️

2·10 days ago

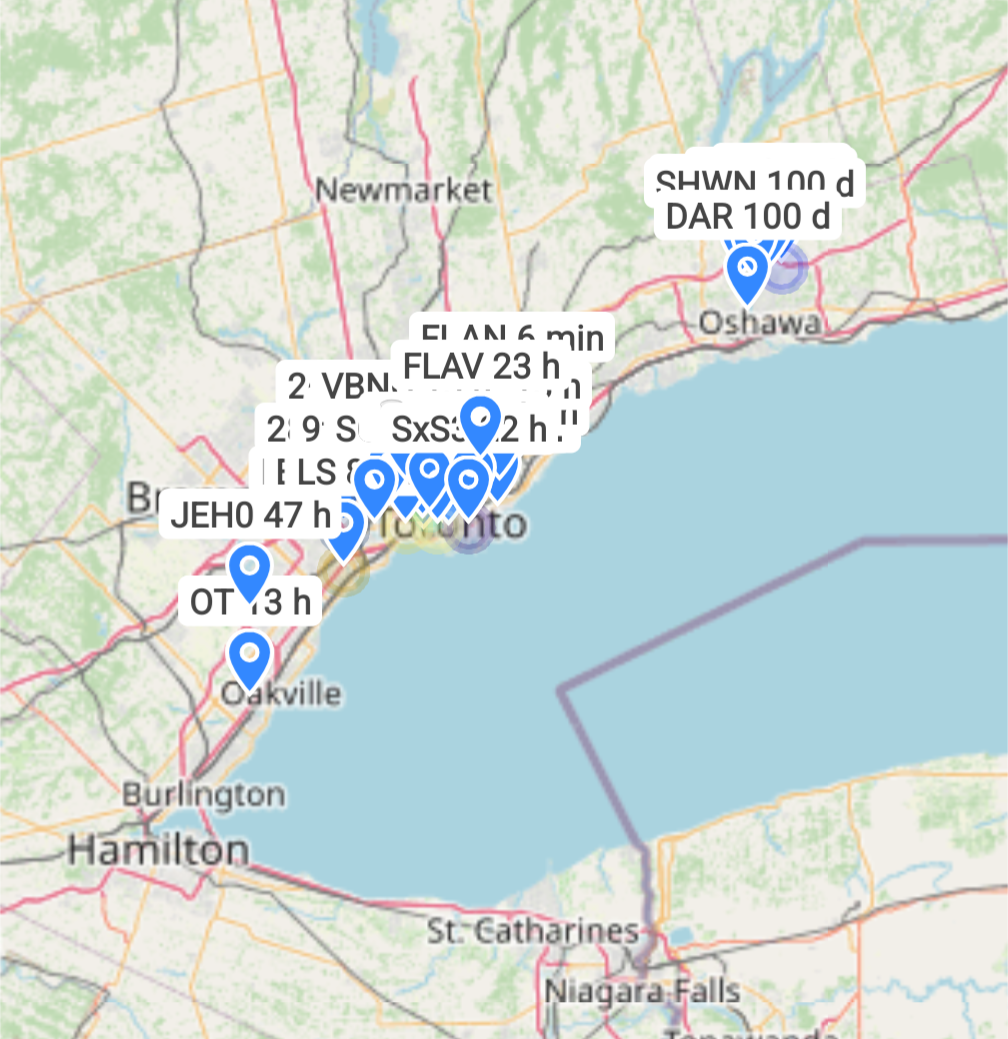

2·10 days agoYeah, I’m in the Greater Toronto and Hamilton Area (GTHA) in Canada. There’s 40+ nodes on the map that I’ve discovered by sitting on my balcony and 70+ nodes altogether:

I expected way fewer than that!

2·10 days ago

Yup. There are people occasionally writing in LongFast.

1·10 days ago

I know, I kid, but yes, I just got it because it’s cheap enough to try. In reality the only use case I envision right now is to have a couple of units in the drawer for emergency scenarios.

7·10 days ago

Stop attacking me. 🥹

But yeah, no use case other than checking if it works. I’ll probably set up a standalone node on my balcony and leave it be to strengthen the network.

16·10 days ago

She goes out to talk about topics she’s not well versed in without doing enough research and says outright wrong stuff. In general, scientists who specialize in a field are often no better than a layman in fields they have no background in. She’s not the only one who does this. Some scientists merely share uninformed opinions. Others do it for money. They build a persona that is imbued with trust by their existing expertise, then use this trust to keep pumping out other material for profit. That’s Sabine. Also Jordan Peterson. No, they’re not the same, but the scheme is. Laymen don’t know any better and absorb the material, correct or incorrect, since they don’t have the background to recognize when it’s bullshit.

21·11 days ago

I just got a unit a couple of days ago and it… just works. It’s quite impressive.

254·11 days ago

Sabine is an idiot but she’s probably not wrong today.

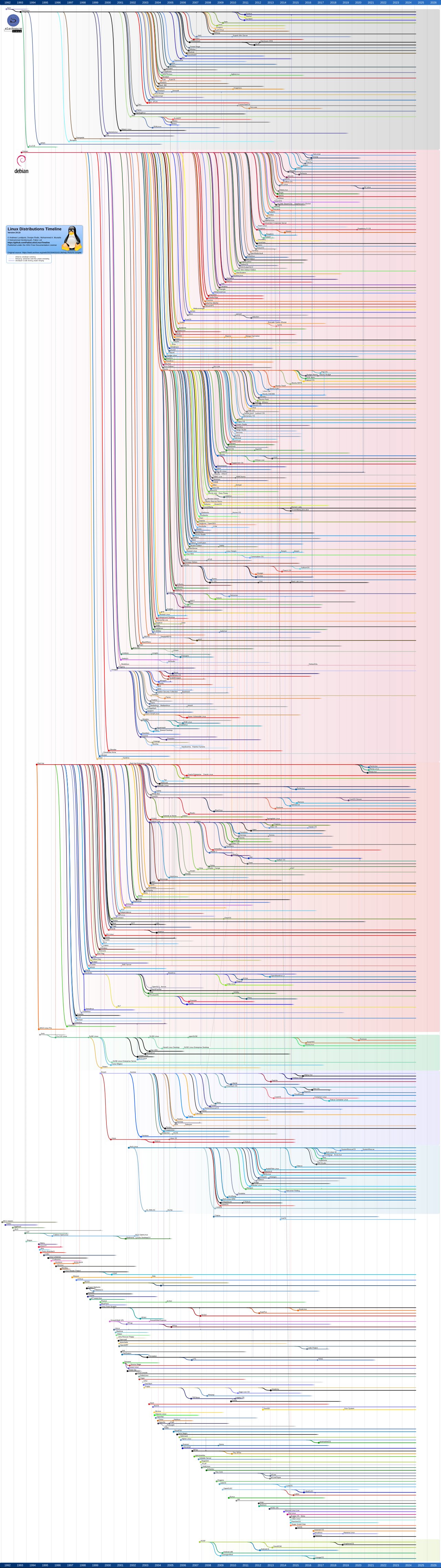

A bit more useful, puts the different parent distros to scale:

High-res source: https://en.wikipedia.org/wiki/List_of_Linux_distributions?wprov=sfla1

2·13 days ago

This is nice but it appears to be around A55 performance, which is similar to the SiFive cores. I’m eager to see high-speed cores that match the upper end of the ARM core range. And if they happen to be open source…

7·14 days ago

Give us a Banana Pi with a fast, SMIC-made RISC-V processor. That’ll be the beginning of the end for ARM and all the report you need.

On desktop, yeah. Unity > GNOME, upstart > systemd, snap. I don’t fuck with snap, I just use it as intended, I don’t try to remove it. I think I started actively using it in 2016. As a software developer I understand that only the happy path is reasonably tested so I try not to go too far out of it. 😂

I typically wait for the LTS point release before upgrading. I check the release notes. After the upgrade I check if anything is broken and fix as needed. I’m sure I did some stuff when the migration to GNOME happened. But that’s to be expected when a major component change occurs. If you had some non-default config or workflow, it might require rework. E.g. some custom PulseAudio config broke on my laptop with the migration to PipeWire in 24.04. But on that legendary desktop install, the only unexpected breakage was during an upgrade when the power went out. Luckily upgrades are just apt operations so I was able to recover and finish the upgrade manually.

I think a friend is running a 2012 or 2010 install. 🥲

And I’ve also swapped multiple hardware platforms on this install. 😂 Went AMD > Intel > AMD > more AMD. Swapped SSDs, went single to mirror, increased in size.

I mean… once you kick the Windows-brain reinstall habit and you learn enough, the automatic instinct upon something unexpected becomes to investigate and fix it. Reinstall is just so much more laborious on a customized machine.

Interesting. We’ve used it at work since 2016 (high hundreds of workstations) and I’ve used it since 2005 on a variety of machines and use cases without significant issues. We’ve also used it to operate a couple of datacenters (OpenStack private clouds) with good results. That said, I’ve been using LTS exclusively since 2014 and haven’t used PPAs since 2018-20, and it’s been solid. My main machine hasn’t been reinstalled since the initial install in 2014.

Debian stable. It’s been here for 30 years, it’s the largest community OS, it’ll likely be here in 30 years (or until we destroy ourselves). Any derivative is subject to a higher probability of additional issues, stoppage of development in the long run, etc.

If you’re extra lazy, Ubuntu LTS with Ubuntu Pro (free) enabled. You could use that for 10 years (or until Canonical cancels it) before you need to upgrade. Ubuntu is the least risky alternative for boring operation since it’s used in the enterprise and Canonical is profitable. The risk there is Canonical doing an IPO and Ubuntu going the way of tightening access like Red Hat did.

4·18 days ago

It’s okay, all they have to do is get a bid from another Big Tech company that doesn’t have home stuff like Amazon. Failing that, there’s always private equity. 🥲

The machine that was last installed in 2014 is Ubuntu LTS. It’s been upgraded through all the LTS releases since then. Currently on 22.04 with the free Ubuntu Pro enabled. I use a mix of Ubuntu LTS and Debian stable on other machines. For example my laptop is on Debian 12. Debian has been the most reliable OS and community for over 30 years and I believe it’ll still be around 30 years from now, if we haven’t destroyed ourselves. 😂

If it had great Wi-Fi reception, that would be a killer.